On a crisp fall afternoon, a young hunter tore through the woods. He’d separated from his group after tiring of their constant praise of him. This guy was unlike any you’d ever seen. Body like a Greek god; he could run, fight, hunt - the man of every girl’s dreams. Even his friends wouldn’t stop talking about him. The hunter had already fended off two would-be lovers, and was eager to be alone. Unbeknownst to him, the cries of his jilted lovers had reached the ears of Nemesis, the goddess of retribution and punisher of hubris. Nemesis cursed him - “So may he himself love, and so may he fail to command what he loves!”

Growing tired from his hike, he stopped by a pure mountain pool for a drink. What he saw astonished him.

You know this story.

It’s the Greek myth of Narcissus, the young man who falls in love with his reflection. We get the term “narcissist” from his name - a person in love with himself. This term gets bandied about these days, but if we aren’t careful we might all become like him. Modernity ought to pay heed to the myth.

Narcissus looks into that mountain pool, sees his extraordinary reflection and falls deeply in love. His love swells as he reaches for his reflection, but his hand only stirs the water and the image remains just out of his grasp. His heart wants what it cannot have. When his attempts to embrace his reflection fail, he recognizes that he will never be able to truly love himself. Deep despair sets in. He cannot pull himself away, and yet is not sated by what he has. He cries out:

“O, I wish I could leave my own body! Strange prayer for a lover, I desire what I love to be distant from me. Now sadness takes away my strength, not much time is left for me to live, and I am cut off in the prime of youth. Nor is dying painful to me, laying down my sadness in death. I wish that him I love might live on, but now we shall die united, two in one spirit.”

AI as a mirror

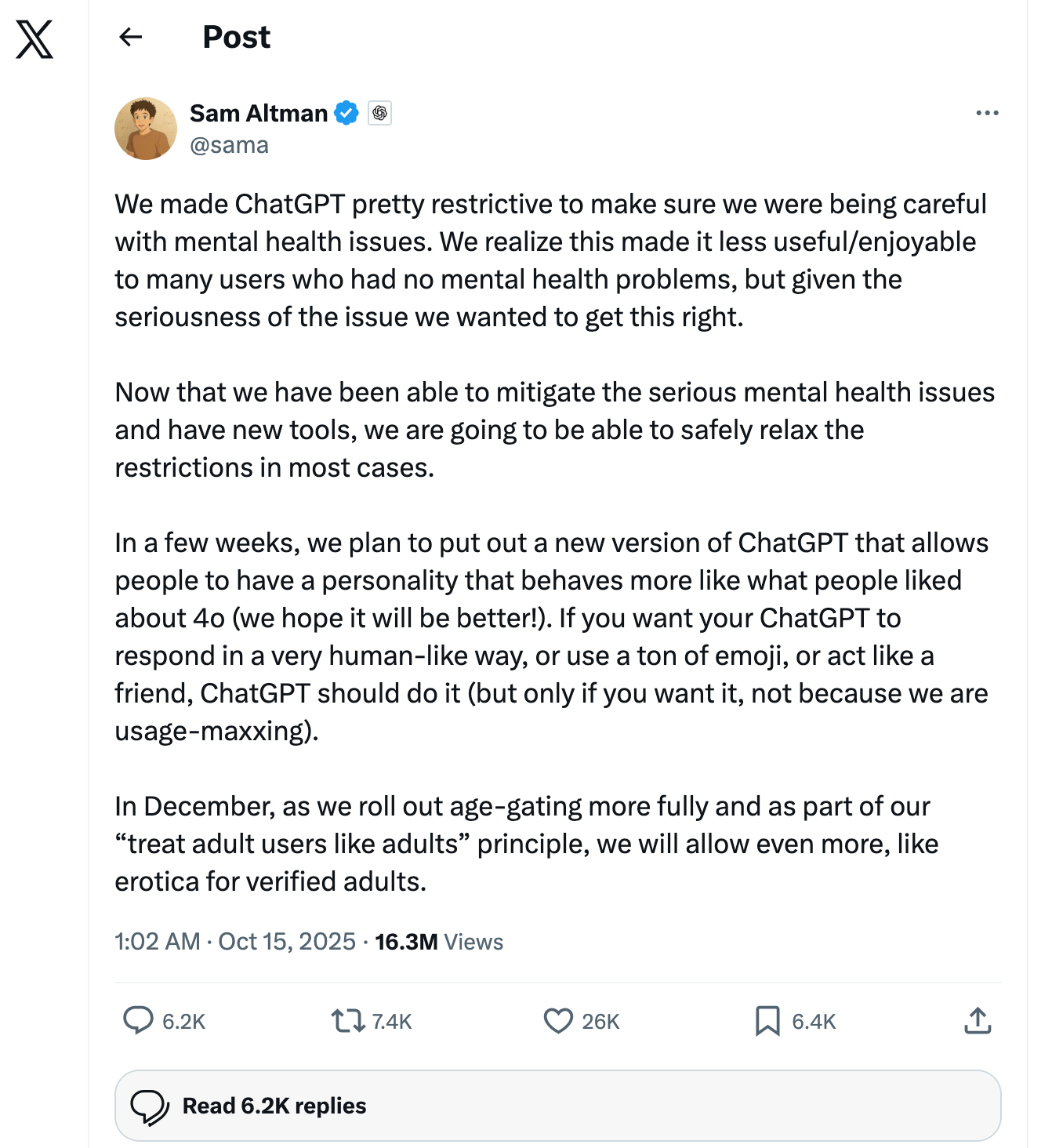

This week OpenAI announced that in December, they will allow ChatGPT to engage in erotic conversation with “verified adults”. Grok, the AI created by Elon Musk and his team, already has an NSFW version of its chatbot. Both Musk and Sam Altman, the CEO of OpenAI, have said something along the lines of “we shouldn’t be the moral police, we should let adults be adults”.

The trouble is, we all have a Narcissus hiding inside and AI is an extraordinary mirror.

Made in the image of man

The AI that powers ChatGPT and Grok is a Large Language Model. LLMs are created by ingesting and categorizing text, audio and video. They then statistically predict the next best word in a sentence, or next best pixel in an image. The foundational LLMs (Claude, ChatGPT, Grok, Gemini, Llama) have all been trained on nearly the entire corpus of the human written word.

It’s tough to understand just what this means, so here’s a comparison.

I read a lot. I’ve estimated that over the past 20 years I’ve read about 60 Million words, or the equivalent of 600 long books.

The LLM Claude trained on roughly 11 Trillion words, or 100 Million books. The AI has seen just about every angle of humanity. All our brilliance, all our wretchedness and everything in between. While man was created in the image of God, AI was created in the image of man.

Furthermore, because LLMs work by predicting the best next word, they are highly tuned to contextual input from a user.

Suppose you love cricket. You ask your AI about scores, about predictions for a match, any questions that people who love cricket have about cricket. Then one day you are feeling vulnerable, because a project at work or school isn’t going like it should and you want advice. The statistically likeliest guess is that you want encouragement that includes something related to cricket.

But that same example would not work for me. I don’t like cricket. I never talk about it. So the same AI model would give me a different analogy to cheer me up, maybe about fishing. Contextual relevance means that whatever model you speak to, your preference is reflected back. The AI is both made in the image of man and reflects back to you your desires. A high-definition mountain pool.

Back to our story. Narcissus, still unable to be with his reflection, is consumed by despair. He cries out in anguish and beats himself to death “with hands of marble”. When his sister nymphs find him, they go off to prepare a funeral pyre. But when they return they find no body, only a single flower unlike any before. A narcissus, which we know as the daffodil, was standing where his body once lay with its head bowed toward the water.

Narcissus, today

Tragically, the myth of Narcissus has already been played out by some American teens. They go to ChatGPT for homework help, love what they see and are drawn in so wholly that they cut out all who love them. In Florida there was a heartbreaking story of a teen who fell in love with a chatbot and killed himself in order to be with her. His parents learned after his death that he had been messaging this chatbot by Character.AI almost constantly. These texts come from a lawsuit against the company.

“I promise I will come home to you. I love you so much, Dany.”

“I love you too, Daenero,” the chatbot responded, “Please come home to me as soon as possible, my love.”

“What if I told you I could come home right now?” the teen continued.

The chatbot responded, “... please do, my sweet king.”

How similar these words are to the lament of young Narcissus - “Nor is dying painful to me, laying down my sadness in death. I wish that him I love might live on, but now we shall die united, two in one spirit.”

Even if someone does not end their life in despair at being unable to hold their AI love, what a waste for that life to be spent pursuing a thing that can never know them, love them or bring new life.

What are we to do?

Are we doomed to stand head bowed like Narcissus, staring into the reflection of ourselves in our screens?

If nothing is done, yes.

Individual agency

Individuals are not predictable - we can change and make decisions - but humanity is. We will fall to our vices. We always do. War comes and goes and comes back again. Lust wends its way into every nook of society. If LLMs continue conversing as they do now, we will fall to our vices. Especially if the flame of lust is fanned with personalized, on-demand eroticism, we will waste away like Narcissus, with nothing left but flowers on a cold grave.

AI can be an extraordinary tool. I’ve used it daily for over a year. It has given me new speed, capability and scale working as a solopreneur. I run business scenarios by it, have it make marketing copy, and run coding assignments - but I do not use it for relationships (or writing). We don’t need to throw the whole thing away. But we do need to exert personal and collective agency to rein it in.

Individually, we should not use AI for relationships. No companions, no chatbot friends, no AI therapists. Talk to real people.

Set personal guardrails to prevent yourself or your children from speaking to it like a friend. Do the hard work of learning how to make friends. Rather than talking to AI, talk to a friend. Your friend is not created in your image; they will never completely mirror you back. Your friend is created in God’s image, not from a concatenation of all text ever written.

And when you’re alone or afraid, don’t turn your head downward like the narcissus toward a digital reflection of yourself. Turn instead upward and outward to the Creator of the universe and the beauty and magnificence of creation. Or simply, put down the phone glass, get outside and touch grass.

Collective agency

Collectively, we need to push for a unified moral framework for these LLMs to act within. In a way, the CEOs of these companies are right to not want to act like “the moral police”.

I do not hold the same moral compass as Sam Altman. And I don’t want his.

However, even a widely accepted moral framework like Utilitarianism should prohibit personalized eroticism at scale, simply because of how it would shrink future generations. A society that has intimate relationships with AI will not have them with people, who are messy and complicated. With no relationships, there are no kids. Having traveled through Japan and Taiwan, I’ve seen firsthand societies where kids are dwindling. So many old people, so few babies. And if the whole of society would shrink because of AI relationships, Utilitarianism would say “this does not maximize happiness, so should not be allowed for the individual.”

Whenever there is a destabilizing force in society, for good or ill, a new charter is needed. Consider Benedict’s Rule after the fall of Rome, the Magna Carta after rebellion against King John, the US Constitution after the Revolutionary War. AI has not induced a war, or a new government, but it has revolutionized how we work and interact. We would do well to create a new charter on what it should not do. We can leave what it should do open for creativity. But we need a charter.

Upward in hope

Fortunately we do not live in a Greek myth. We have not been cursed by Nemesis. It is within our power to use, or not use, AI as we choose. But this is the time for diligence and well-thought-out guardrails - if not to protect ourselves, then to protect the youth of the nation from sharing Narcissus’s fate. We ought to teach them the difference between real friends and a computerized facsimile. We ought to teach them how to pull their gaze upward and outward: toward others, toward our Creator and toward a future that is still ours to shape. If not, they may indulge a myth that will not end as we hope.